The release of ChatGPT by OpenAI has catalyzed a “Generative AI” storm in the tech industry. Recent developments include:

- Microsoft’s investment in OpenAI has made headlines as a potential challenge to Google’s monopoly in search.

- The recent relaunch of Microsoft’s AI-powered Bing search engine to one million users has also raised alarms about misinformation.

- VCs have increased investment in Generative AI by 425% since 2020, to $2.1bn.

- “Tech Twitter” has blown up, and even mainstream media, whose jobs are among the most threatened by such advances, have carried articles on the topic.

As investors at BGV, we have made human-centric AI the foundational core of our investment thesis around Enterprise 4.0. We have dug deeply into the challenges of building B2B AI businesses in our portfolio, as well as into how disruptive venture-scale businesses can be built around Generative AI.

Recently we also put ChatGPT to the test on the topic of human-centric AI by asking two simple questions: What are the promises and perils of Human AI? And what are important innovations in Ethical AI? We then contrasted ChatGPT’s responses with what we have learned from subject matter experts on the same questions: Erik Brynjolfsson, whose article “The Turing Trap: The Promise & Peril of Human-Like Artificial Intelligence” (Daedalus, 2022) addresses the promises and perils of AI, and Abhinav Ragunathan, who published a market map of human-centric ethical AI startups (EAIDB) in the EAIGG annual report (2022). This analysis, combined with BGV’s investment thesis work, led us to address four important questions on the topic of Generative AI. We make the case that the timing for Generative AI is now, that ChatGPT will not replace search engines overnight, that it will spur startup innovation at the application layer, and that it will spur demand for guardrails to address trust and ethical AI concerns.

(1) Why Now

The confluence of sharply declining AI hardware and software costs and the maturation of key generative AI technologies leads us to believe that the time for innovation has already begun. Economic downturns are periods of rich creativity and innovation; research shows that over half of Fortune 500 companies were created during downturns. We believe that this period of innovation will be fueled by a wave of AI-led enterprises that will write the next chapter of Digital Transformation.

With rapid progress in transformer and diffusion models, we now have models with billions, or even trillions, of parameters. These models can extract connections and relationships across varied sources of content, enabling them to generate text, images, and videos realistic enough that humans may find them hard to distinguish from reality. These systems’ ability to generalize while preserving detail provides an order-of-magnitude improvement over the “search query and show relevant links” model.

(2) Replacing Search Engines

ChatGPT represents a tremendous area of innovation, but it will not replace Google Search overnight, for a few reasons:

- The answers are generic, lack depth, and are sometimes wrong. Before trusting ChatGPT responses implicitly, users will need to confirm the integrity and veracity of the information sources. Stack Overflow has already banned ChatGPT-generated answers, saying: “because the average rate of getting correct answers from ChatGPT is too low, the posting of answers created by ChatGPT is substantially harmful to our site and to users who are looking for correct answers.” Given the effort required to verify responses, ChatGPT is not yet ready for enterprise (B2B) use cases.

- Putting aside accuracy, there is also the question of sustainable business models. While ChatGPT is free today and costs per billion inferences are falling sharply, running GPUs is expensive, so competing profitably with Google Search at scale is difficult.

- Google will not stand still; they have already launched Bard with machine and imitation learning to “outscore” ChatGPT on conversational services. We’ve only seen the opening act in a much larger showdown.

- The recent release of Microsoft’s AI-powered search engine has exposed key underlying issues in using ChatGPT-style models as a search engine.

At first glance, the results look impressive, but they often lack the depth you get from talking to a subject matter expert.

(3) Emergence of Ethical Issues and Human in the Loop

We believe that Generative AI tools like ChatGPT can augment subject matter experts by automating repetitive tasks. However, we doubt they will displace them entirely in B2B use cases due to lack of domain-specific contextual knowledge and the need for trust and verification of underlying data sets.

Broader adoption of ChatGPT will spur increased demand for authenticated, verifiable data. This will advance data integrity and verification solutions, alongside innovations addressing other ethical AI issues such as privacy, fairness, and governance.

The surge in Generative AI interest will quickly prompt demands to prioritize human values, ethics, and guardrails. Early indications of this are:

- The recent publication by Kathy Baxter and Paula Goldman of Salesforce, “Generative AI: 5 Guidelines for Responsible Development,” which aims to guide the development of trusted generative AI at Salesforce.

- Jesus Mantas of IBM summarizes the underlying ethical issues in his article “AI Ethics Human in the Loop”: “…we need a broader systemic view…that considers the interconnection of humans and technology, and the behavior of such systems in the broadest sense. It’s not enough to make parts of a decision system fair…if we then leave a risk of manipulation in how humans interact with and use those algorithms’ outcomes to make decisions.”

- Sam Altman, co-founder and CEO of OpenAI: “ChatGPT is incredibly limited, but good enough at some things to create a misleading impression of greatness. it’s a mistake to be relying on it for anything important right now. it’s a preview of progress; we have lots of work to do on robustness and truthfulness.”

Moreover, the innovation prompted by the Generative AI boom and the need for trust is poised to follow a curve similar to that of its “ethics predecessors”: cybersecurity in the late 2000s and privacy in the late 2010s.

(4) Attractive Investment Areas for Startups

Generative AI will unleash additional productivity gains in enterprises and will go well beyond chatbots to cover use cases like generating written and visual content, writing code (such as automation scripts), debugging, and managing and manipulating data. However, many of the first wave of generative AI startups will fail to build profitable, venture-scale B2B businesses unless they explicitly address the following three core barriers:

- Inherent trust and verification issues associated with generative AI

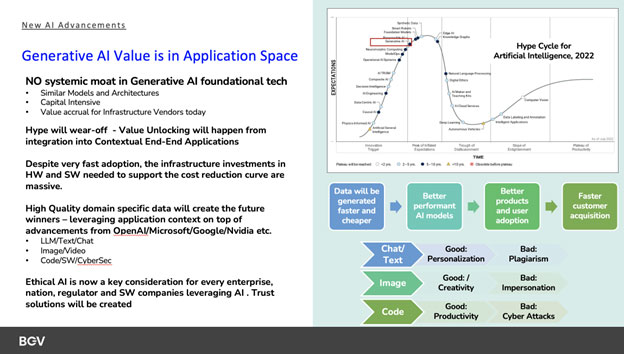

- Lack of defensible moats, with everyone relying on same underlying foundational models

- Lack of sustainable business models given the high costs of running generative AI infrastructure (GPUs)

It is unclear where in the stack most of the value will accrue: infrastructure, models, or apps. Currently, infrastructure providers (like NVIDIA) are the biggest beneficiaries of OpenAI’s success. It is also unclear where startups can break the oligopoly of infrastructure incumbents like Google, AWS, and Microsoft, who touch everything, as explored in the a16z article “Who Owns the Generative AI Platform?”

We believe that successful Generative AI B2B startups will fall into three categories:

- Applications: integrating generative AI models into sticky, user-facing productivity apps, using foundation models or proprietary models as a base to build on, either in verticals like media, gaming, design, and copywriting, or in key enterprise functions like DevOps, marketing, and customer support.

- Models: verticalized models will be needed to power the applications highlighted above. Building on foundation models or open-source checkpoints can yield productivity gains and a quicker path to monetization, but may lack defensibility.

- Infrastructure: solutions that cost-effectively run training and inference workloads for generative AI models by breaking the GPU cost curve. We will also see AI governance solutions that address the disinformation that broader adoption of tools like ChatGPT will create as an unintended consequence, as well as a wide range of other ethical issues.

We believe that Generative AI is ushering in a novel computing model, one that transforms how computers are programmed, how applications are built, and how many people can put this new computing platform to work. In the case of workstations, the number of people who could put that computing model to work was measured in hundreds of thousands. For PCs it was measured in hundreds of millions. For mobile devices it was billions. The number of applications grew exponentially with each subsequent computing model.

With Large Language Models, the number of productivity applications is going to grow exponentially because they will enable anyone and everyone to write their own applications. BGV therefore believes that many successful startups will be in the application layer. This is especially true of startups that harness the democratization of generative AI and bring it to everyday workflows, using intelligent workflow automation and proprietary verticalized datasets to deliver the greatest productivity improvements to end users.

We also believe there will be opportunities for startups to innovate at the hardware layer – to break the GPU cost curve, though these are likely to be more capital-intensive investments.

Along with these opportunities for value creation, Generative AI carries several downside risks, including:

- Text: while generating marketing copy may be a force multiplier for martech solutions, potential plagiarism of original content may expose enterprises to liability.

- Images: while Generative AI can create marketing images at scale without incurring production costs, the same models could create threats like the impersonation of real people.

- Code: while regular snippets of code can be written automatically, the same code generation could be used to exploit vulnerabilities within enterprises.

Conclusion

Generative AI is poised to unleash a tremendous wave of innovation in human productivity in use cases like writing code, creating content, debugging, and managing and manipulating data, but it is unlikely to replace Google Search overnight or displace human subject matter experts. Large enterprises will find it difficult to simply call an API and start using Generative AI in enterprise contexts without relying on startup technology innovation, specifically at the application layer, where productivity tools augment human work through intelligent workflow automation and proprietary verticalized datasets. Broader adoption of Generative AI will also spur increased demand for guardrails and the innovation needed to build them. The tech stack of the future will address not only productivity but also ethical issues like privacy, fairness, and governance.